Aviation became a reality in the early 20th century, but it took 20 years before the proper safety precautions enabled widespread adoption of air travel. Today, the future of fully autonomous vehicles is similarly cloudy, due in large part to safety concerns.

To accelerate that timeline, PhD student Heng “Hank” Yang and his team have developed the first set of “certifiable perception” algorithms, which could help protect the next generation of self-driving vehicles, as well as the vehicles they share the road with.

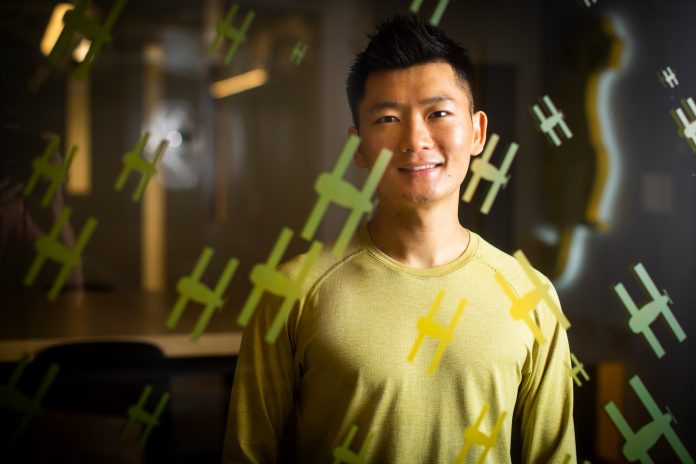

Although Yang is a rising star in his field today, it took him years to settle on robotics and autonomous systems. Raised in China's Jiangsu province, he earned his bachelor's degree with honors from Tsinghua University. He spent his college days studying everything from honey bees to cell mechanics. “My curiosity drove me to study a lot of things. Over time, I turned more and more toward mechanical engineering, because it overlaps with so many other fields,” says Yang.

Yang went on to earn a master's degree in mechanical engineering at MIT, where he worked on improving an ultrasound imaging system to track liver fibrosis. To reach his engineering goal, he decided to take a course on designing algorithms to control robots.

“The course also covered mathematical optimization, which involves adapting abstract formulas to model almost everything in the world,” says Yang. “I learned a neat solution that tied up the loose ends of my thesis. I was amazed at how powerful computation can be for optimizing design. From then on, I knew it was the right field for me to explore next.”

Certifiably correct algorithms

Yang is now a PhD student in the Laboratory for Information and Decision Systems (LIDS), where he works with Luca Carlone, the Leonardo Career Development Associate Professor in Engineering, on the challenge of certifiable perception. When robots sense their surroundings, they must use algorithms to estimate both the environment and their own location. “But these perception algorithms are designed to be fast, with little guarantee that the robot has correctly understood its surroundings,” says Yang. “This is one of the biggest existing problems. Our lab is working to develop ‘certified’ algorithms that can tell you whether these estimates are correct.”

For example, robot perception begins with the robot capturing an image, such as a self-driving car taking a snapshot of an approaching car. The image passes through a machine learning system called a neural network, which generates key points within the image at the approaching car's mirrors, wheels, doors, and so on. From there, lines are drawn that attempt to match the detected key points in the 2D car image to the labeled 3D key points of a 3D car model. “We then have to solve an optimization problem to rotate and translate the 3D model to align with the key points in the image,” says Yang. “This 3D model will help the robot understand the real-world environment.”
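The alignment step can be illustrated with a deliberately simplified toy. This sketch is not the team's solver: it assumes the key-point matches are already known and correct, and it works in a 2D plane with no camera projection, so the least-squares rotation and translation have a closed form (2D Procrustes). The key-point coordinates and function names are hypothetical.

```python
import math

Point = tuple[float, float]


def fit_rigid_2d(model: list[Point], observed: list[Point]) -> tuple[float, Point]:
    """Return (theta, t) minimizing sum ||R(theta) @ m_i + t - o_i||^2.

    Assumes model[i] and observed[i] are already matched key points
    (a planar toy version of the alignment step, not the team's method).
    """
    n = len(model)
    # Centroids of both point sets.
    mx = sum(x for x, _ in model) / n
    my = sum(y for _, y in model) / n
    ox = sum(x for x, _ in observed) / n
    oy = sum(y for _, y in observed) / n
    # Accumulate dot and cross products of the centered point pairs.
    s_cos = s_sin = 0.0
    for (ax, ay), (bx, by) in zip(model, observed):
        ax, ay, bx, by = ax - mx, ay - my, bx - ox, by - oy
        s_cos += ax * bx + ay * by
        s_sin += ax * by - ay * bx
    # Closed-form least-squares rotation angle.
    theta = math.atan2(s_sin, s_cos)
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated model centroid onto the observed centroid.
    t = (ox - (c * mx - s * my), oy - (s * mx + c * my))
    return theta, t


def apply(theta: float, t: Point, pts: list[Point]) -> list[Point]:
    """Rotate points by theta, then translate them by t."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + t[0], s * x + c * y + t[1]) for x, y in pts]


# Hypothetical model key points: mirror, front wheel, rear wheel, door handle.
model = [(0.0, 1.0), (1.5, 0.0), (-1.5, 0.0), (0.2, 0.6)]
observed = apply(math.radians(30), (4.0, -2.0), model)
theta, t = fit_rigid_2d(model, observed)
print(round(math.degrees(theta), 6), tuple(round(v, 6) for v in t))
```

With clean matches the fit recovers the 30-degree rotation and the (4, -2) shift exactly; the hard part the article describes begins when some matches are wrong.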

Each drawn line must be checked to see whether it represents a correct match. Because many key points could be mismatched (for example, the neural network could mistakenly recognize a mirror as a door handle), the problem is “non-convex” and hard to solve. Yang says that his team's algorithm, which won the Best Paper Award in Robot Vision at the International Conference on Robotics and Automation (ICRA), smooths the non-convex problem into a convex one and finds the correct matches. “If a match isn't right, our algorithm knows how to keep trying until it finds the best solution, known as the global minimum. If there is no better solution, a certificate is issued,” he explains.
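To make “global minimum” and “certificate” concrete, the sketch below swaps in a much simpler technique than the team's convex smoothing: a one-dimensional truncated least squares (TLS) problem, a common robust cost that caps each measurement's penalty so mismatches stop dominating. The cost is non-convex, but in one dimension its global minimum can be found by checking every interval between breakpoints, and that exhaustive check doubles as a proof of optimality. The measurements and the threshold `c` are hypothetical.

```python
def tls_cost(x: float, ys: list[float], c: float) -> float:
    """Truncated least squares: each measurement contributes at most c**2."""
    return sum(min((x - y) ** 2, c * c) for y in ys)


def tls_global_min(ys: list[float], c: float) -> tuple[float, float, list[float]]:
    """Globally minimize tls_cost over x by checking every interval.

    Between consecutive breakpoints y_i +/- c the set of inliers
    (|x - y_i| < c) is fixed, so the cost is a convex quadratic there and
    its minimizer is the inlier mean clipped to the interval. Checking all
    intervals proves the returned x is the global minimum.
    """
    breaks = sorted({y - c for y in ys} | {y + c for y in ys})
    # Outside [breaks[0], breaks[-1]] every point is an outlier; the cost there
    # equals the cost at breaks[0], so that is a valid starting candidate.
    best_x, best_cost = breaks[0], tls_cost(breaks[0], ys, c)
    for lo, hi in zip(breaks, breaks[1:]):
        mid = 0.5 * (lo + hi)
        inliers = [y for y in ys if abs(mid - y) < c]
        x = sum(inliers) / len(inliers) if inliers else mid
        x = min(max(x, lo), hi)  # keep the candidate inside this interval
        cost = tls_cost(x, ys, c)
        if cost < best_cost:
            best_x, best_cost = x, cost
    inliers = [y for y in ys if abs(best_x - y) < c]
    return best_x, best_cost, inliers


# Hypothetical measurements; the last two play the role of mismatched key points.
measurements = [1.0, 1.2, 0.9, 1.1, 8.0, -5.0]
x, cost, inliers = tls_global_min(measurements, c=0.5)
print(x, cost, inliers)
```

A plain average of these measurements would be pulled to 1.2 by the two outliers, whereas the TLS global minimum sits at the mean of the four consistent values. Brute-force enumeration does not scale to full 3D pose problems, which is why relaxation-based certificates matter there.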

“These certifiable algorithms have enormous potential, because systems such as self-driving cars have to be robust and trustworthy. Our goal is for the driver to receive a warning to take over the steering wheel if the perception system has failed.”
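One common way a certifiable solver reports its confidence is to return, alongside its estimate, a proven lower bound on the best achievable cost (for example, from a convex relaxation). If the estimate's cost matches the bound, the estimate is provably optimal; if there is a gap, optimality cannot be certified. The sketch below wires that idea to a takeover warning. The class, function names, tolerance, and policy are all hypothetical illustrations, not part of the team's system.

```python
from dataclasses import dataclass


@dataclass
class CertifiedEstimate:
    cost: float         # objective value of the estimate the solver returned
    lower_bound: float  # proven bound on the best achievable cost (hypothetical source: a relaxation)


def relative_gap(est: CertifiedEstimate) -> float:
    """Relative suboptimality gap; zero means the estimate is provably optimal."""
    return (est.cost - est.lower_bound) / (1.0 + abs(est.cost))


def needs_takeover(est: CertifiedEstimate, tol: float = 1e-6) -> bool:
    """Hypothetical policy: warn the driver whenever optimality cannot be certified."""
    return relative_gap(est) > tol


# Hypothetical solver outputs.
tight = CertifiedEstimate(cost=0.55, lower_bound=0.55)
loose = CertifiedEstimate(cost=3.2, lower_bound=0.4)
print(needs_takeover(tight), needs_takeover(loose))
```

Normalizing by `1.0 + abs(cost)` is one design choice that keeps the gap well defined when the cost is near zero; other normalizations are equally reasonable.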

Adapting the model to different cars

When matching the 2D image to the 3D model, there is an assumption that the 3D model matches the identified type of car. But what if the pictured car has a shape the robot has never seen in its library? “We now have to both estimate the position of the car and reconstruct the shape of the model,” says Yang.

The team found a way to meet this challenge: the 3D model is fitted to the 2D image as a linear combination of previously identified vehicles. For example, the model could shift from an Audi toward a Hyundai as it registers the actual car's true build. Knowing the dimensions of the approaching car is key to avoiding collisions. This work made Yang and his team finalists for the Best Paper Award at the Robotics: Science and Systems (RSS) Conference, where Yang was also recognized as an RSS Pioneer.
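The “linear combination of previously identified vehicles” idea can be sketched as a small least-squares problem. This is a simplified illustration under loud assumptions: the car's pose is already known, its key points are available in 3D, and the weights are unconstrained, whereas the team's work estimates pose and shape together from 2D key points. The two-car library and its coordinates are invented for the example.

```python
Point3 = tuple[float, float, float]


def solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a small dense linear system by Gauss-Jordan elimination."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        # Partial pivoting for numerical stability.
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[piv][col]) < 1e-12:
            raise ValueError("library shapes are linearly dependent")
        m[col], m[piv] = m[piv], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_shape_weights(library: list[list[Point3]], observed: list[Point3]) -> list[float]:
    """Least-squares weights w such that sum_k w[k] * library[k] ~= observed."""
    basis = [[v for p in shape for v in p] for shape in library]  # flatten each shape
    y = [v for p in observed for v in p]
    k = len(basis)
    # Normal equations: (B^T B) w = B^T y.
    gram = [[sum(a * b for a, b in zip(basis[i], basis[j])) for j in range(k)] for i in range(k)]
    rhs = [sum(a * b for a, b in zip(basis[i], y)) for i in range(k)]
    return solve(gram, rhs)


# Hypothetical library: matching key points on two known car models.
sedan = [(2.0, 0.9, 0.0), (-2.0, 0.9, 0.0), (1.0, 0.8, 1.4), (-1.2, 0.8, 1.4)]
hatchback = [(1.7, 0.9, 0.0), (-1.7, 0.9, 0.0), (0.8, 0.8, 1.5), (-1.6, 0.8, 1.5)]
# An unseen car whose shape lies "between" the two library models.
observed = [tuple(0.7 * a + 0.3 * b for a, b in zip(p, q)) for p, q in zip(sedan, hatchback)]
weights = fit_shape_weights([sedan, hatchback], observed)
print(weights)
```

Once the weights are known, the blended shape gives an estimate of the unseen car's dimensions, which is the quantity the article highlights for avoiding collisions.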

In addition to presenting at international conferences, Yang enjoys discussing and sharing his research with the public. He recently presented his work on certifiable perception at MIT's first public Research SLAM showcase. He also co-organized the first virtual LIDS Student Conference alongside industry leaders. One of his favorite talks there focused on ways to combine theory and practice. “What stuck with me was how he really emphasized what these rigorous analytical tools can do for the good of society,” says Yang.

Yang plans to continue researching challenging problems in safe and trustworthy autonomy by pursuing a career in academia. His dream of becoming a professor is also fueled by his love of mentoring, which he enjoys doing in Carlone's lab. He hopes his future work will lead to more discoveries that help protect people's lives. “I think many are realizing that the existing solutions we have to promote human safety are not enough,” says Yang. “To achieve trustworthy autonomy, it is time for us to use a diverse set of tools to develop the next generation of safe perception algorithms.”

“There always has to be a failsafe, because none of our human-made systems can be perfect. I believe it will take the power of both rigorous theory and computation to revolutionize what we can successfully unveil to the public.”